As a sampling of our work and our approach, we spotlight here the ongoing projects of some of our graduate researchers.

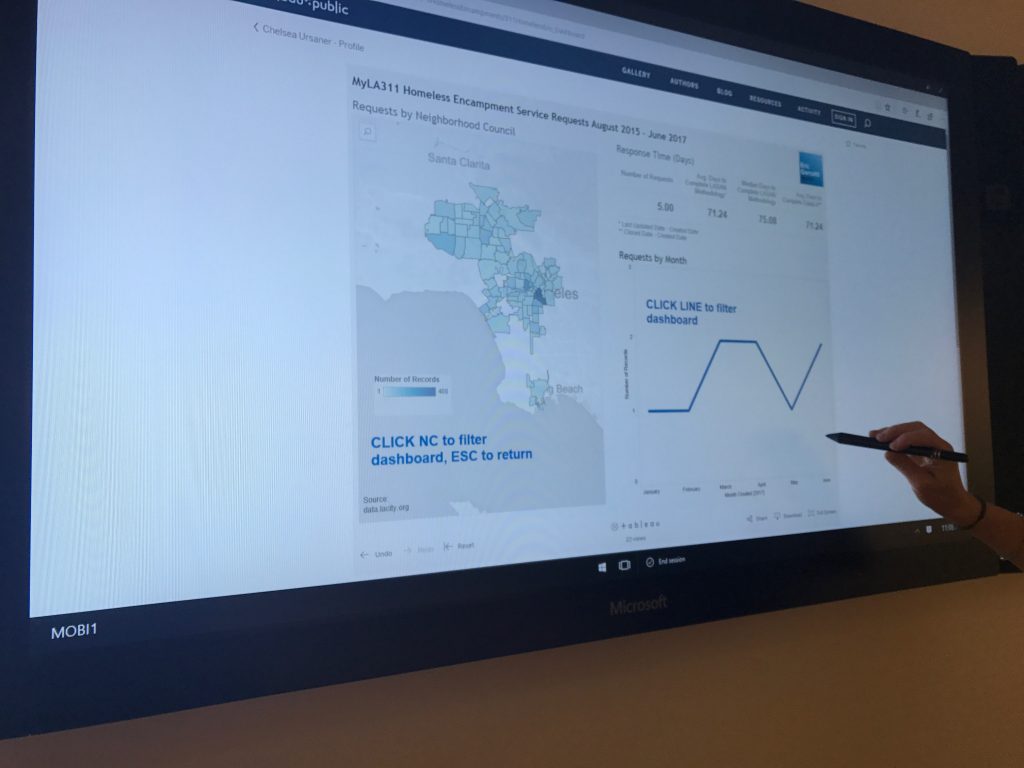

As cities race to rebrand themselves as “smart”, data and data tools have become indispensable parts of urban government. But what does this imply for the day-to-day activities of both government workers and city residents? Leah Horgan’s research explores the narratives and practices of data in city infrastructures. For their ethnographic research, Leah has spent several years working alongside teams in city government charged with implementing data-centered visions.

As Leah’s research shows, data is never cut-and-dried. Contests around data — around whose data matters, around who has responsibility for it, or around what data really says — reconfigure the relationships between people and the city, or between departments in city government. Data does not speak for itself, but has to be “put to work”; it needs to accommodate institutional and political realities in order to achieve effective outcomes. Data-driven governance creates new alliances amongst government departments, tech firms, and community groups, which reshape the visions of urban futures. At the same time, those aspects of city life that are not easily captured by data run the risk of disappearing from view, and different communities are differentially enrolled in data projects or included in smart visions.

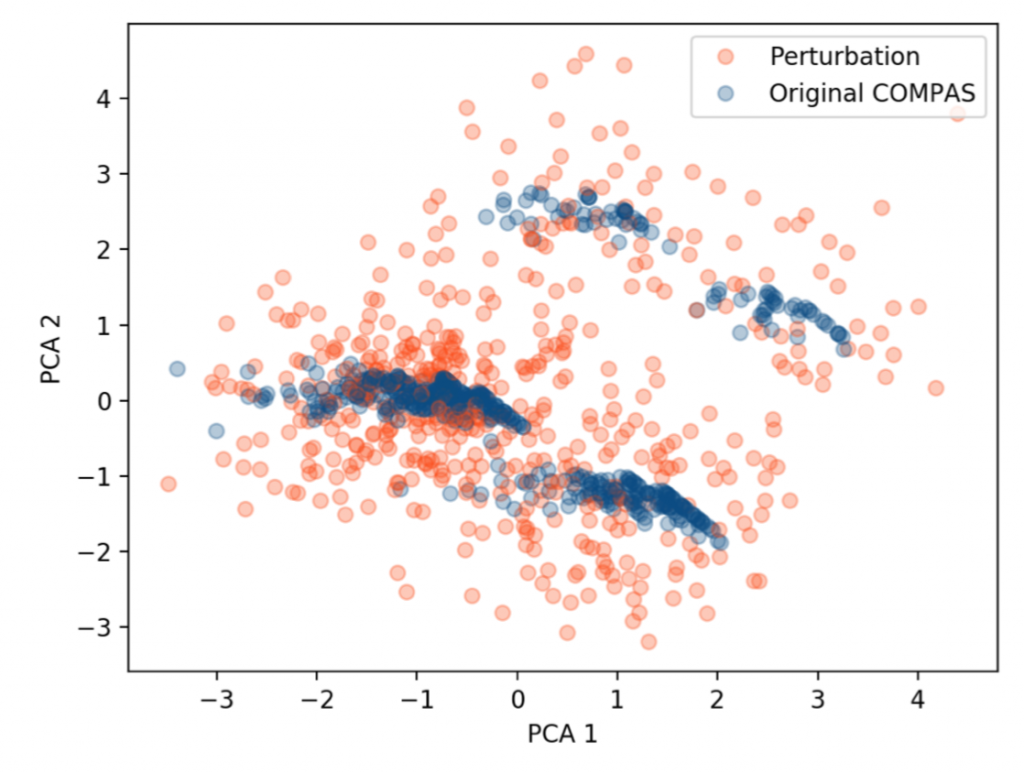

Data is becoming increasingly central to our everyday lives, but the growing mediation of all domains of sociality by technologies of automated capture, aggregation, and analysis carries real risks, especially for racialized and minoritized communities. How might we more responsibly design, build, and adopt (or, conversely, refuse) the data-intensive computational infrastructures to which we have delegated important decisions? Doctoral students Lucy Pei and Benedict “Bono” Olgado have been working on a research project that draws on the emergent research paradigm of data justice (data + social justice) to find out.

In addition to their individual research projects, Bono and Lucy ran a year-long research practicum that brought together faculty, graduate students, and undergraduate researchers to understand how organizations that serve minoritized communities conceptualize and use data in pursuing justice across various domains. They developed more than 75 case studies and interviewed community leaders to understand the many ways that minoritized communities resist data-intensive technologies, even as they seek to mobilize the power of data for community-defined goals. Bono and Lucy work at the intersection of inequality and technological change, insisting on community-informed ways of thinking about data.

Despite efforts by tech companies to eliminate hiring bias and improve workforce diversity, the tech workforce remains largely homogeneous. We know that gender and race matter in employers’ hiring decisions, but it is unclear how social class background influences their assessments. Through interviews with employers and Ph.D.-level internship applicants, Phoebe Chua’s research examines the role of social class in shaping the hiring process.

One might think that Ph.D. students at elite universities like MIT would have an easy time getting internships, given their robust access to social networks and insider knowledge about hiring expectations. Phoebe’s early findings challenge this assumption. Even at the elite Ph.D. level, she finds that students’ experiences of the hiring process can vary with social class. Unlike their upper-middle-class counterparts, students from less class-privileged backgrounds often describe the process as emotionally exhausting. This is because employers’ hiring expectations often privilege upper-middle-class ways of interacting with interviewers and leveraging social connections, which may clash with the values of less class-privileged students. Overall, Phoebe’s research sheds light on the subtle social class biases underlying the hiring process and identifies strategies companies can use to implement equitable hiring practices.

In the summer of 2022, we funded several graduate fellows in support of their research. Richard Martinez worked with students from the Homeboy Art Academy, all of them high-risk youth, to explore their experiences of and attitudes toward wearable, mixed-reality technology; you can read his report here. Jina Hong investigated questions of technological resiliency from the perspective of people responsible for maintenance and repair; you can read her report here. Sam Carter used film and TV production as both a site and a method to examine the limits on participation in cultural production faced by under-represented groups; you can read her report here.